Quick summary:

- I compared the Mevo ($400) to the current gold standard of doppler radar launch monitors, the Trackman 4 ($19,000)

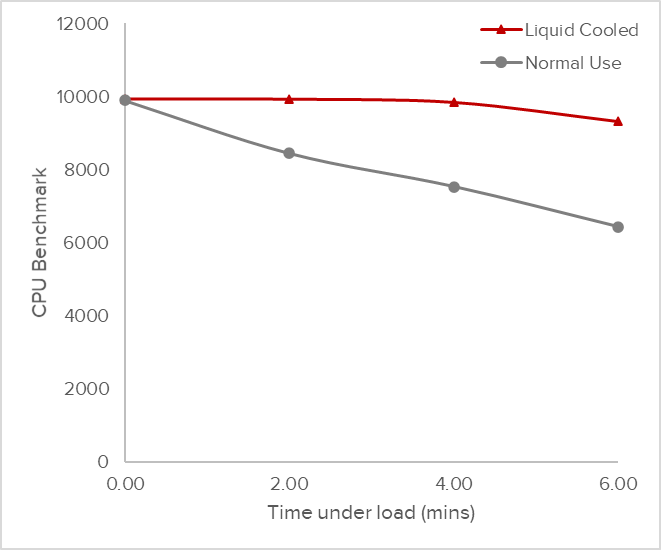

- The Mevo performs extremely well for ball speed (on any given shot, less than 1% off)

- The Mevo performs very well for club speed (usually less than 3% off)

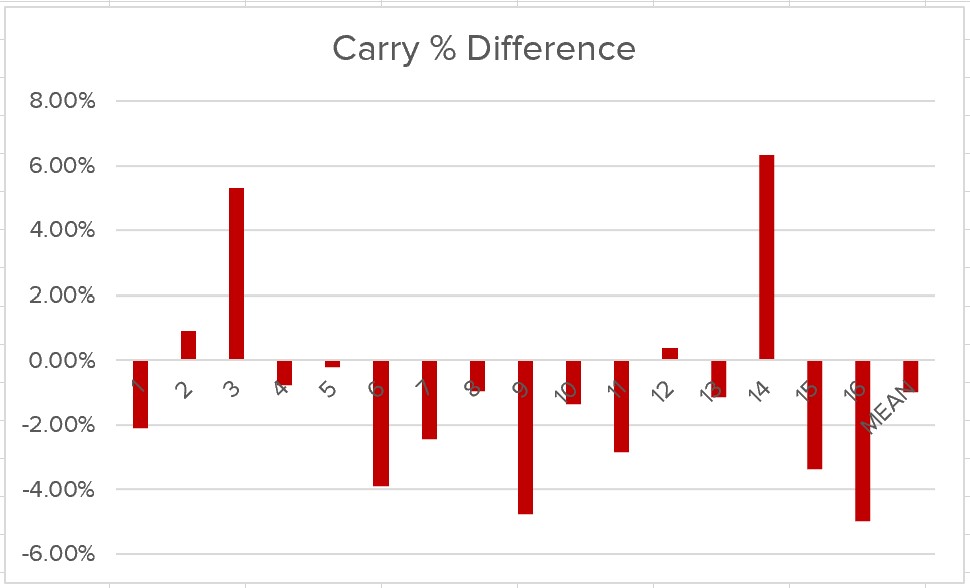

- The Mevo performs pretty well for carry (usually less than 5% off)

- Spin and launch angle are highly erratic and are almost unusable (20-60% off)

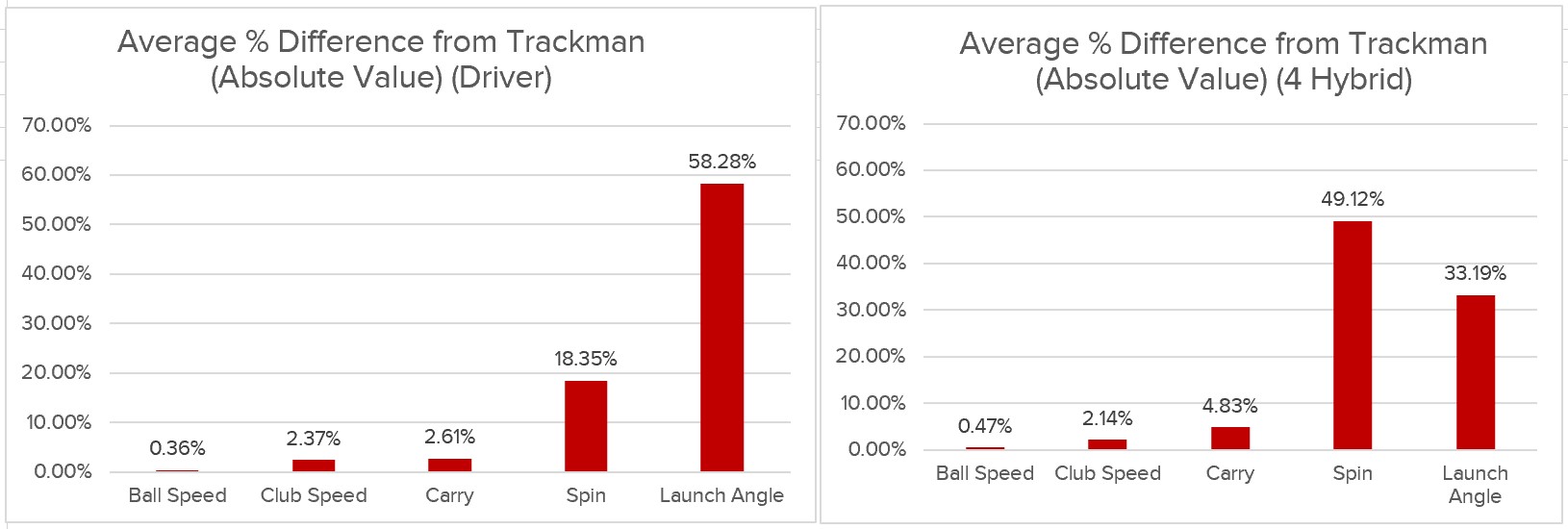

- Below are the simplest and fastest graphs to summarize the accuracy:

Overview, methods:

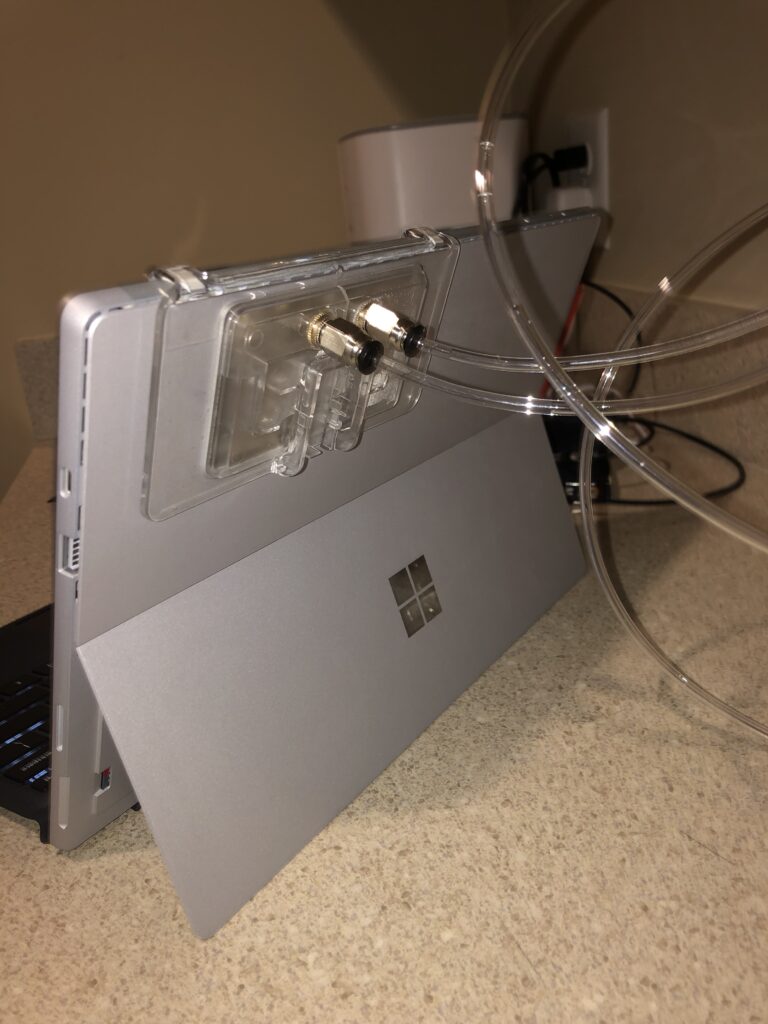

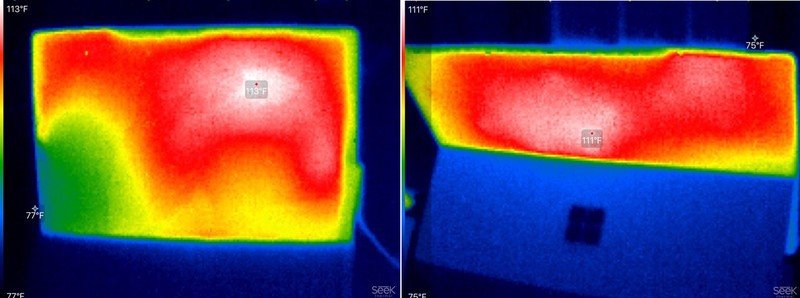

The Mevo ($400) is a portable launch monitor that uses Doppler radar to measure different parameters of a golf shot. There is a wide variety of launch monitors at various price points, but at the top end for radar models is the Trackman 4 (starting at $18,995), which is the gold standard I used for these tests. Owning one is impractical for almost everyone outside of pros and coaches, but thankfully they can be rented for $30/hour where I live. I spent a couple of sessions hitting on one and comparing its read of each shot to the Mevo’s. Both devices were calibrated for the altitude I usually play at in Akron (1,000 ft. above sea level).

Data was collected indoors with 8 feet of unobstructed ball flight. Reflective metallic stickers were used on each ball to improve accuracy, per FlightScope’s recommendations. I used TaylorMade TP5x balls for all shots. I include “2022” in the title because a disgusting amount of data interpretation happens behind the scenes with these companies and their proprietary calculations, and FlightScope’s firmware updates improve accuracy even as the hardware remains the same.

I think it’s realistic to break this down into two parts – accuracy for individual shots, and accuracy for club gapping purposes (i.e., looking at averages for 5-10 shots with each club). For the latter, if someone hits 10 shots with each club, the individual variation doesn’t matter as much so long as the averages are close to the true (Trackman) averages. For individual shots, I’m going to avoid using “average % error”, because if the Mevo mixes over-reads with under-reads they cancel each other out, and the average comes back misleadingly close to zero. Instead, I’ll use the absolute value of the % difference on each shot. I’ll show the % difference for individual shots for a couple of sets of data (there is too much data for this to be practical for each shot and club). Session averages are true (signed) averages.
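To make the cancellation problem concrete, here is a minimal Python sketch. The four shots are made-up numbers chosen for illustration, not my measured data, and `pct_diff` is just a helper name I picked for this example.

```python
def pct_diff(mevo: float, trackman: float) -> float:
    """Signed % difference of the Mevo reading from the Trackman reading."""
    return (mevo - trackman) / trackman * 100.0


# Hypothetical (trackman_mph, mevo_mph) ball speeds, invented for illustration:
# two over-reads and two under-reads of the same size.
shots = [(150.0, 153.0), (150.0, 147.0), (150.0, 151.5), (150.0, 148.5)]

signed = [pct_diff(mevo, trackman) for trackman, mevo in shots]

# A plain average lets over-reads and under-reads cancel each other out.
mean_signed = sum(signed) / len(signed)

# Averaging the absolute values keeps the size of every miss.
mean_abs = sum(abs(d) for d in signed) / len(signed)

print(f"mean signed % difference:   {mean_signed:.2f}")
print(f"mean absolute % difference: {mean_abs:.2f}")
```

With these invented shots the signed average comes out at zero even though every single reading is off by 1-2%, while the absolute average reports 1.5%.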

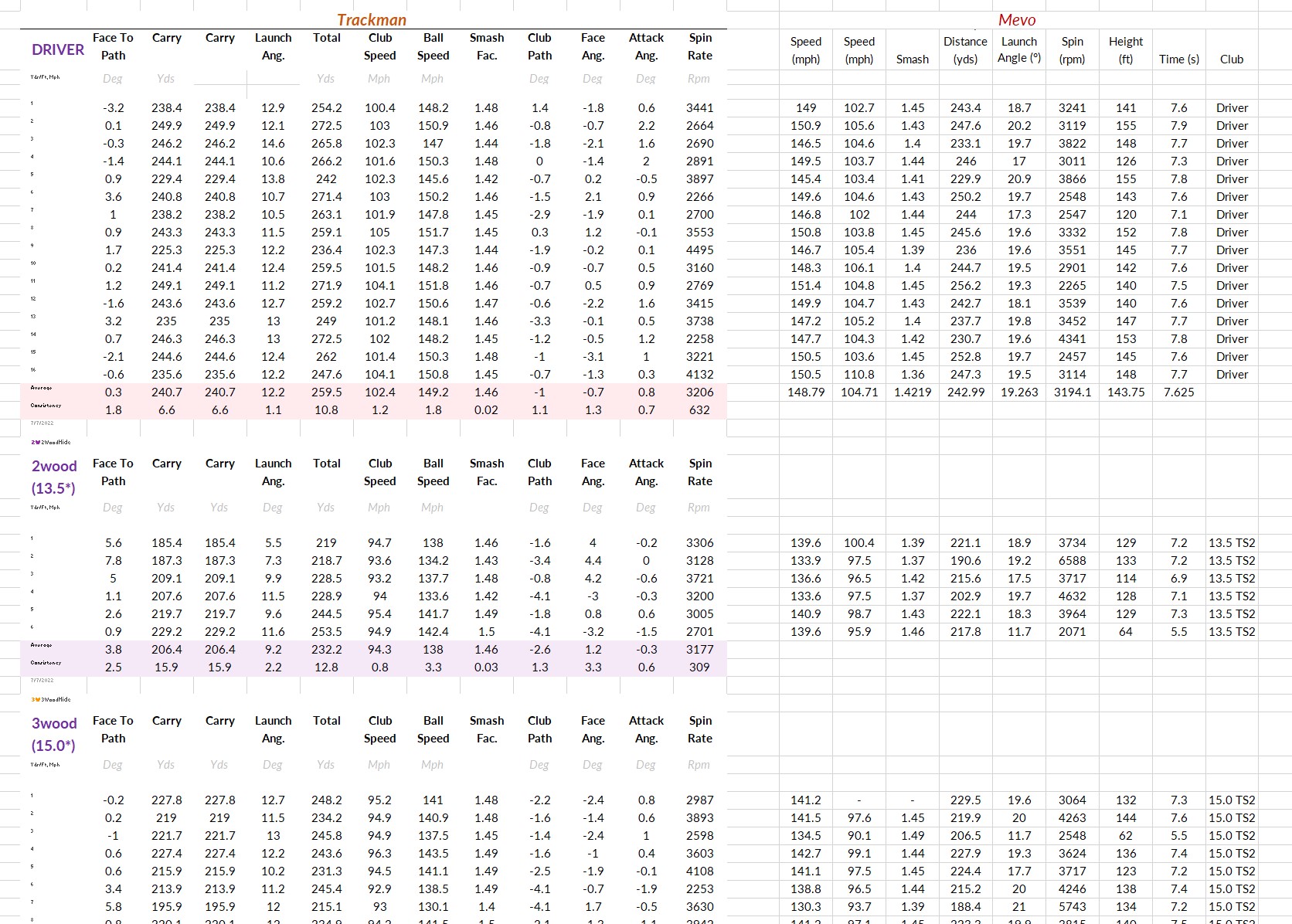

Below is some of the raw data after I pasted everything from both Trackman and Flightscope’s websites to Excel:

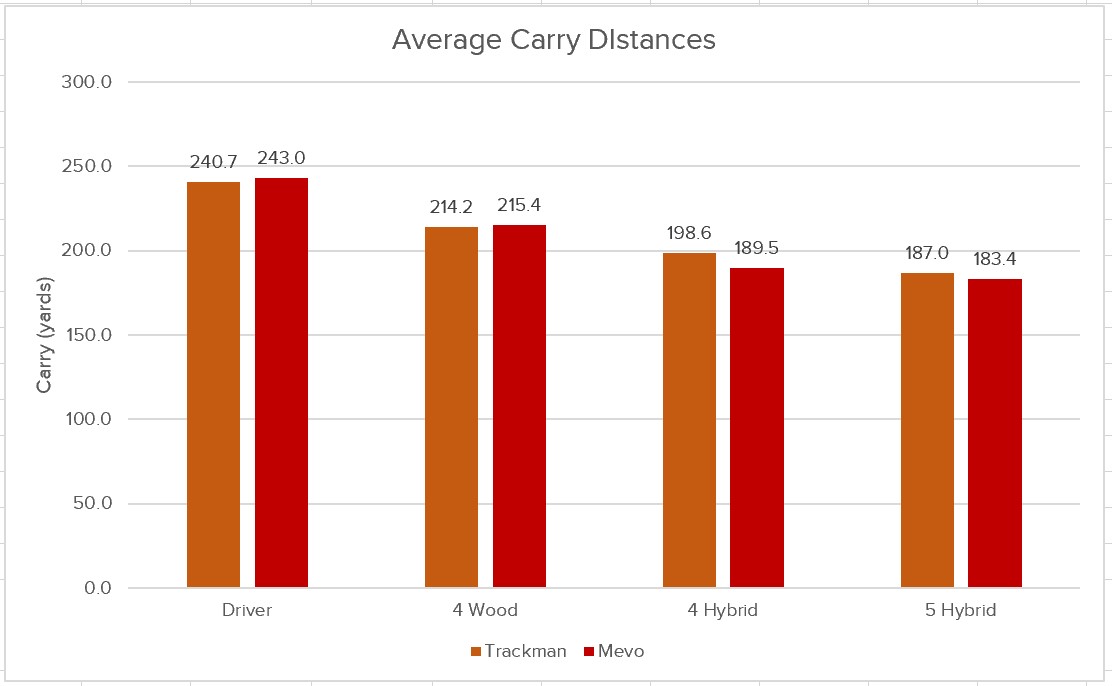

Results: Comparing session averages (5-15 shots with each club)

Results: Comparing the parameters for individual shots:

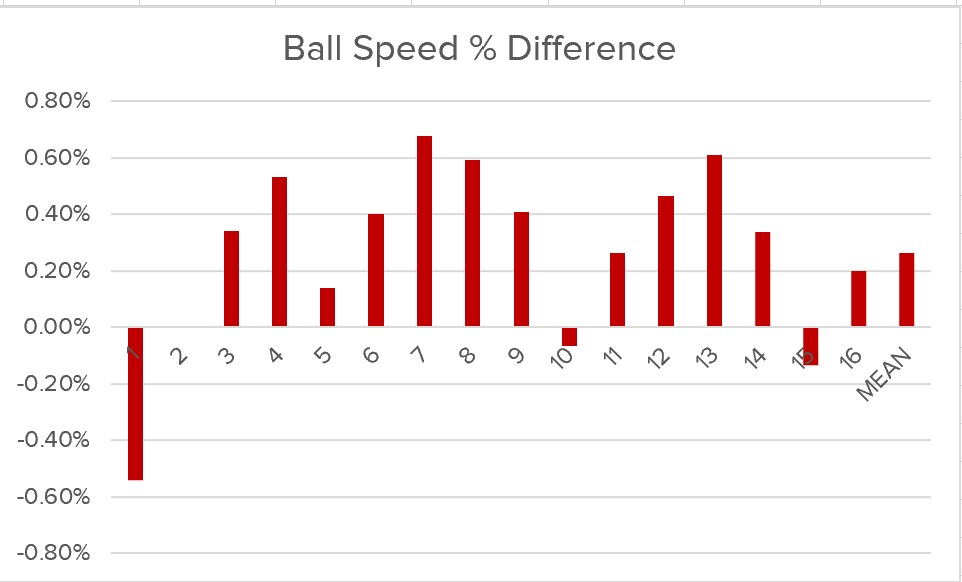

For 16 swings of a driver, I compared the ball speed (mph) that Trackman measured with the ball speed that Mevo measured. Below is the percent difference between Mevo’s reading and Trackman’s on each of these 16 individual swings. The Mevo never missed a shot and never differed by more than 0.8%. On average, it read slightly low. It is important to point out that the differences here are extremely minute – the session average on Trackman was 149.2 mph, whereas Mevo’s was 148.8 mph, about 0.3% lower. This difference is too small to have any actionable impact, even on a professional fitting.

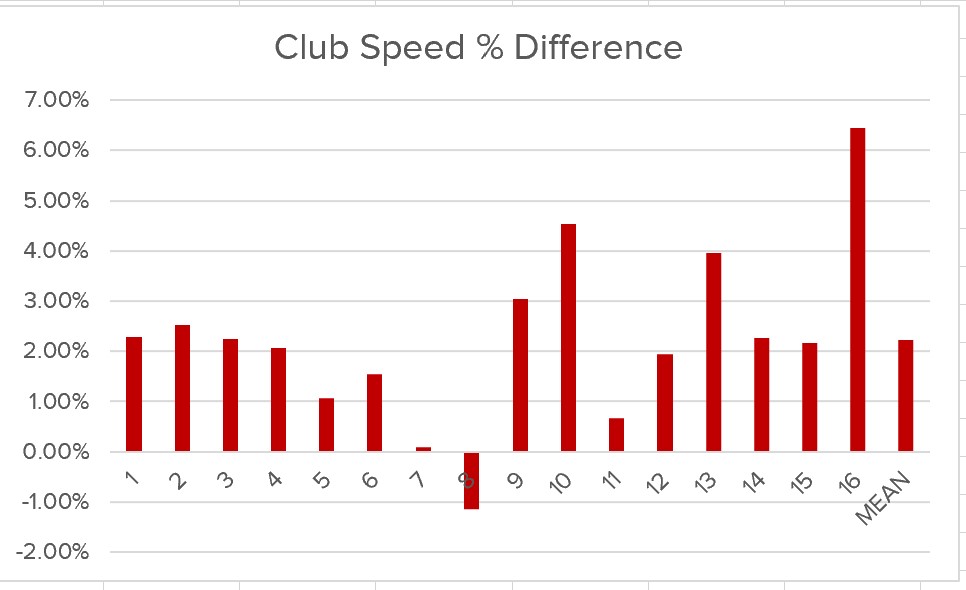

Below is the same data for the other parameters:

Results: Average difference from Trackman (Abs):

As discussed above, I think using the absolute value of the difference from Trackman on each individual shot is a fair way to gauge “how far from the true value might this be?” when you are using your Mevo on the range and receive a carry distance or ball speed. So below is the average of this difference on each shot:

Discussion:

Mevo performed admirably given the price point and its competitors. Its ball speed measurements are effectively indistinguishable from Trackman’s. At 160 mph, a 0.5% difference would mean a reading of 159.2-160.8 mph, which is not significant enough for anyone to care about, outside of robotic equipment testing. Club speed is largely consistent, if slightly less accurate. Launch angle was consistently over-estimated. Spin was highly erroneous. Carry is influenced by spin and launch, and poorly read spin numbers skewed carry distance at times. To give an example, here is a tale of two reads with a 4 Hybrid shot that I struck thin:

- Trackman: 127.2 mph, 14.8° launch, 3,300 rpm spin → 199.1 yard carry

- Mevo: 127.1 mph, 20.8° launch, 7,250 rpm spin → 175.6 yard carry

The gruesomely over-estimated spin and launch led to a low carry distance, even though Mevo nailed the ball speed.
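For anyone who wants to see how far apart those two reads are in percentage terms, here is a small Python sketch that uses only the four pairs of numbers quoted for that thin hybrid shot. The dictionary layout and the `pct_diff` helper are my own choices for illustration.

```python
def pct_diff(mevo: float, trackman: float) -> float:
    """Signed % difference of the Mevo reading from the Trackman reading."""
    return (mevo - trackman) / trackman * 100.0


# (Trackman, Mevo) readings for the thin 4 Hybrid shot quoted above.
readings = {
    "ball speed (mph)": (127.2, 127.1),
    "launch (deg)": (14.8, 20.8),
    "spin (rpm)": (3300.0, 7250.0),
    "carry (yd)": (199.1, 175.6),
}

diffs = {name: pct_diff(mevo, trackman)
         for name, (trackman, mevo) in readings.items()}

for name, diff in diffs.items():
    print(f"{name:>17}: {diff:+.1f}%")
```

On this one shot the ball speed is within about 0.1%, while launch is roughly 40% high, spin is roughly 120% high, and carry ends up about 12% short.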

I think the Mevo is most useful if you have some sort of baseline familiarity with your launch monitor numbers, particularly ball speed. For people trying to build swing speed, ball speed is an important parameter to watch when using your driver, and the Mevo’s ball speed is effectively indistinguishable from your true (Trackman) ball speed. If Mevo reads a spin of 6,000 rpm on a well-struck driver shot, it is useful to be able to say “I know that’s not true” and throw out the carry distance, which would be skewed, while understanding the ball speed is still fine to interpret.

Limitations:

Trackman isn’t infallible, and even though I treated its readings as the ‘true’ values, they are only estimates. In indoor settings some professionals prefer photometric launch monitors (e.g., GC Quad). Trackman’s true strength lies in outdoor use, where it can track the full flight of a shot. As these tests were performed indoors, they are limited. However, Trackman likely represents the technical limit of Doppler radar launch monitors as of 2022, and my goal was to see how close a $400 device can come to this standard.

I also wonder how the presence of more than one radar device affects the readings. These units are effectively floodlights, but instead of visible light from a bulb, they emit longer radio waves from an antenna (or in the case of Trackman, an array of multiple antennae at different frequencies). I wonder if the additional radiation from Trackman would actually improve the performance of the Mevo, which is normally limited by its small size and its meager power as a battery-operated device. If anyone has experience in signal processing and has insight… feel free to email me!

I will add more data with irons this fall as I have time.